Deadlocks, Starvation, Livelock and Race Conditions

If I had an hour to solve a problem I’d spend 55 minutes thinking about the problem and 5 minutes thinking about solutions. – Albert Einstein

Deadlocks, starvation, and race conditions are the bane of multithreaded programming and they can bring down any system in which they occur.

Defining the Problem(s)

Deadlock – Occurs when two competing processes are waiting for each other to finish, allowing neither to finish.

Starvation – Occurs when a process never gains accesses to resources, never allowing the program to finish.

Race Conditions – Occurs when processes that must occur in a particular order occur out of order due to multiple threading. More specifically, this is discussing a data race, please avoid arguments such as this one.

Livelock – Occurs when two threads are dependent on each other signals and are both threads respond to each others signals. If this is the case, the threads can cause a loop similar to something between a deadlock and starvation.

Deadlock

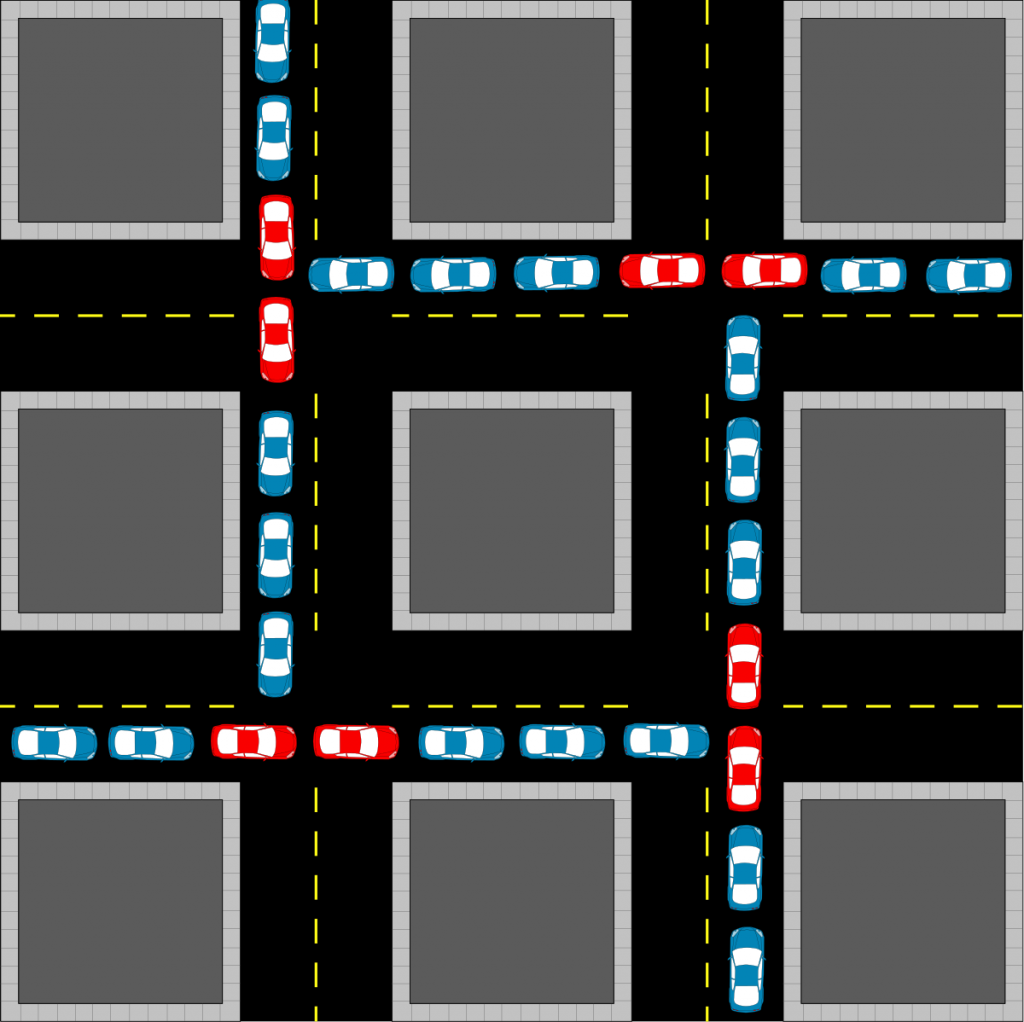

I have on occasion heard the analogy that deadlock occurs in multithreading the same way gridlock occurs in traffic.

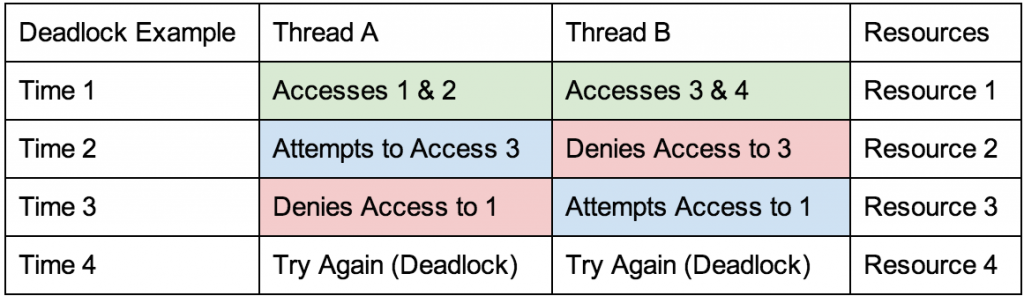

Essentially, one thread, say thread A, attempts to complete a process (hogging some resources). Another thread, thread B, attempts to use resources from A to complete it’s process, but cannot. A cannot complete it’s process until recourses from B are released and thus we have a deadlock. The table below is representative of a deadlock situation:

Each thread in the example needs resources 1, 2, 3, and 4 to complete, but only has access to two, and neither thread will give up their resources. Similar to the traffic gridlock example, there is no way out unless something changes. In programming we can use signaling (such as interprocess communication i.e. pipes), a mutex or semaphores (with timesharing/timeouts) to break deadlocks.

Starvation

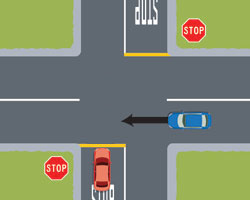

Starvation is exactly what it sounds like, a process is quite literally “starved” never gaining recourses and in turn no progress is made. A real life example could occur at a two way stop sign, where two parallel lanes do not contain stop signs, but the two adjacent do. The way only way this would occur is if there a never ending stream of cars, but for this example that is the case.

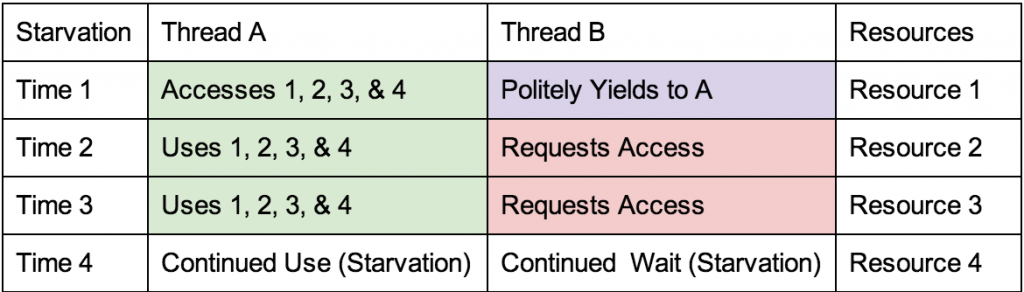

In the example, so long as cars are driving from either left to right or right to left there is no way for the red car to cross the street. This is the same issue processes face upon starvation. The table below represents code in which thread B always yields to thread A and thread A never releases the resources so long as they are in use (and in this case suppose they are always in use).

In the table thread A is constantly using all of the resources, never yielding them to thread B, thereby starving thread B. A simple solution to this problem is to create a mutex and share the resources based on time or some other factor which occurs. The caveat being, if the resources are not shared based on time, they must be shared based on some event(s) which avoid starvation.

Race Conditions

Race conditions, usually refers to a data race, in which two threads simultaneously have access to a critical section, which is a section of code which contains a process which is required to be atomic. Data races are some times difficult to determine in multithreaded programs because a program can often run correctly one, two, or even hundreds of times without ever running into a problem. However, if a program does run into a data race condition it can be disastrous, often leading to deadlock or starvation.

An real world example would be two cars and one intersection. The intersection is representative of the critical section in which only one car (or rather lane of cars) should have access to at a given time.

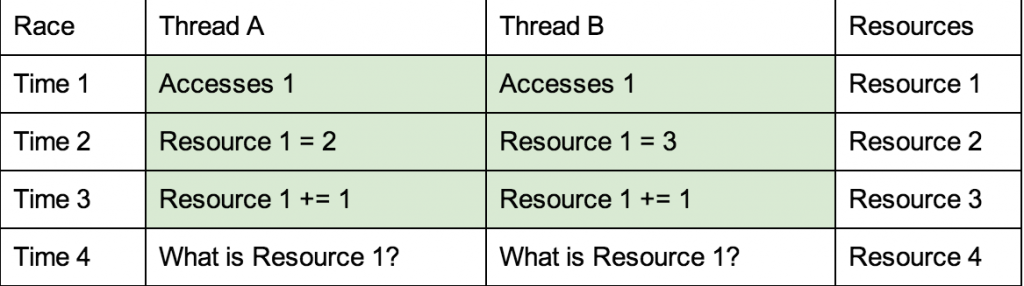

In the real world this happens fairly often, one car attempts to gain access to the intersection and one runs a red light, BAM (deadlock)! In programming this can occur if we do not ensure our critical sections are atomic (Definition of Atomic: forming a single irreducible unit or component in a larger system). In other words, we must use a mutex to protect our critical section of code and only allow one thread access to atomic variables at a given time. If we code without protecting our critical sections we can have the following occur:

In this case, because Thread A and Thread B are running simultaneously and we have no way of knowing (without a mutex) which thread changed resource one at any given time we have no clue what resource 1 currently is. Possible outcomes include:

- Resource 1 = 3 (Thread A finishes before B starts)

- Resource 1 = 4 (Thread B finishes before A starts)

- Resource 1 = 5 (A: Resource 1 = 2; B: Resource 1 = 3; A: Recourse 1 += 1; B: Resource 1 += 1)

- There are also other ways to get to 3 or 4

The point being (in this case) we needed to ensure that only one thread has access to a given resource at a given time.

Note that we do not always need to worry about protecting against race conditions. In many cases, such as this Producer-Consumer example, we do not care the order in which the orders are served. We do however ensure that the “count” of how many orders are currently ready, but not served is protected from a race condition by a mutex. This is because the “count” is data which is critical, the order is not.

Livelock

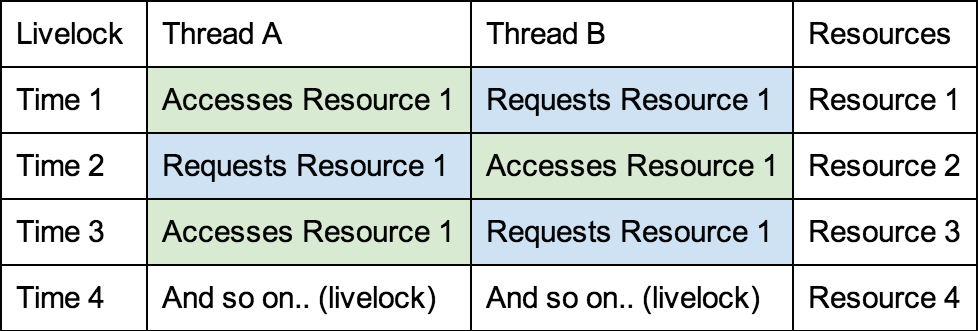

Livelocks – the deadlock with good intentions – are somewhere in-between a deadlock and starvation. Livelocks occur when two threads can communicate (through pipes, variables, or some other method) and both threads react to one another. This usual occurs when both threads relinquish their resources upon any request from another process (and immediately attempt to regain that resource).

Breaking from the car example, imagine two friends are walking together to a coffee shop. When they reach the door one friend stops (friend A), opens the door and offers the other to walk in (friend B). Instead of walking in the friend B tells friend A, “No you.” Friend A then says, “No you.” This is a classic livelock example, where one signal effects the other and no progress is made because neither friend takes initiative.

In programming this happens much the same way, one thread communicates with the other and so on creating the same situation as a deadlock since neither process can complete their execution.

In this program, thread A and B relinquish their resource any time it is requested and since both thread A and B need access to resource 1 no progress is made. A simple (though perhaps naive) solution to this issue is adding a time delay for each request.

I enjoy the read, thanks for the article!

Well written & explained! 🙂 Great article!

Nice article! Threads cannot solve all your problems with performance. They can even introduce new bugs and problems. Hopefully there will be more and more frameworks that ‘automate’ scalability and multithreading.

In C++, std::future and std::async looks promising, for instance. Have you used it? Or maybe similar technique in other languages?

I use OpenMP regularly to simply add some multithreading to my programs. I’ve also used async and future once, it’s pretty nice and I would recommend it. Personally, I still love the POSIX C way of multithreading, for me I enjoy the control, but there is more mistakes to be made as well.