Recently, I have been doing some work for my senior thesis/project related to computer vision. One idea I found interesting, but opted against pursuing, was detecting man-made objects. There are already several methods for doing this, but I thought of a fun twist that would work for a senior thesis. Although I decided to go with a different senior thesis project, I hope that sharing this idea motivates me to complete it or inspires others to.

Characteristics of Natural vs. Man-Made Objects

- Color – In nature, plants are green, blue, brown, or grey, and animals are similarly brown, black, or white. This means colors such as red, purple, orange, and yellow are unlikely to be present. There are edge cases, such as leaves changing color, flowers, blood, etc.

- Shape – In nature, lines are rarely straight. Humans, however, love order, so most of our infrastructure consists of straight lines.

- Heat – Many man-made objects conduct heat “better” than natural ones, or at least conduct or produce heat more uniformly.

- Texture – Many man-made objects are smooth or have a uniformly distributed texture, as opposed to a tree with knots or a rock with bumps.

- Other – There are other differences, but this is enough for now.

Easy to Identify Characteristics

In image processing, attributes such as texture or heat are difficult to determine and would require specialized cameras. Shape and color, on the other hand, are much easier and computationally cheaper characteristics to use. To assess the shape of an object, we can look for lines, corners, regular curves, etc. In particular, if we are searching for man-made objects, we should look for lines and corners. There are several ways to do this; I chose searching for gradients, since they are relatively quick to compute. A gradient here simply means the difference between two pixels (typically neighboring ones). You can then ignore any gradients that are too small and save the remaining values in an array. Finally, you can check that array to see whether the detected edges form straight lines (within a margin of error). There are other methods, such as SIFT, for identifying particular images (feature detection in general could also be useful); other methods are listed here.
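A minimal sketch of the gradient step described above, written in plain Python so it has no dependencies. It assumes one image channel is stored as a 2D list of numbers, takes forward differences to the right and lower neighbors, and sums their absolute values as a cheap magnitude. `find_gradients` and its `threshold` parameter are hypothetical names for illustration, not the original code.

```python
def find_gradients(channel, threshold):
    """Return (row, col, magnitude) for pixels whose local gradient exceeds threshold.

    channel: 2D list of numbers (one image channel).
    threshold: hypothetical cutoff; magnitudes at or below it are ignored.
    """
    height = len(channel)
    width = len(channel[0]) if height else 0
    edges = []
    # The last row and column are skipped: they have no lower/right neighbor.
    for r in range(height - 1):
        for c in range(width - 1):
            dx = channel[r][c + 1] - channel[r][c]  # difference with right neighbor
            dy = channel[r + 1][c] - channel[r][c]  # difference with lower neighbor
            magnitude = abs(dx) + abs(dy)  # L1 magnitude avoids a square root
            if magnitude > threshold:
                edges.append((r, c, magnitude))
    return edges
```

For example, `find_gradients([[0, 0, 10, 10] for _ in range(3)], 5)` returns `[(0, 1, 10), (1, 1, 10)]`, flagging the column where the step from 0 to 10 occurs. The saved coordinates are what a later straight-line check would operate on.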

Methods

The methods I am using are currently very rudimentary, but it seems as if they would work in theory. I started with the following image:

The first step I took was to convert the image into the Lab color space (YUV would also work). I did this to separate the light-to-dark range into its own channel, rather than having it intertwined with each of the RGB channels; this is common practice in computer vision applications. Besides the L (lightness) channel, we also get a* and b*, which are represented by the following image:
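The RGB-to-Lab conversion can be sketched with the standard CIELAB formulas. This sketch assumes 8-bit sRGB input and a D65 white point; both are common defaults, but the post does not say which were used. In practice a library routine such as OpenCV's `cv2.cvtColor` with `COLOR_BGR2LAB` would be the usual choice. `srgb_to_lab` and `split_lab` are hypothetical helpers.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIELAB, assuming a D65 white point."""

    def to_linear(c):
        # Undo the sRGB gamma curve.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    # D65 reference white.
    xn, yn, zn = 0.95047, 1.00000, 1.08883

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    L = 116 * fy - 16         # lightness: 0 (black) to 100 (white)
    a = 500 * (fx - fy)       # positive toward red/magenta, negative toward green
    b_star = 200 * (fy - fz)  # positive toward yellow, negative toward blue
    return L, a, b_star


def split_lab(image):
    """Split a 2D list of (r, g, b) tuples into separate L, a*, b* planes."""
    lab = [[srgb_to_lab(*px) for px in row] for row in image]
    L = [[p[0] for p in row] for row in lab]
    A = [[p[1] for p in row] for row in lab]
    B = [[p[2] for p in row] for row in lab]
    return L, A, B
```

Pure red comes out with a large positive a*, pure green with a negative a*, and pure blue with a negative b*. Splitting the planes apart is what lets the a* stream be processed on its own.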

As you can see, one end of the b* channel is yellow and the other is blue. Similarly, the a* channel has green on one side and red on the other. After converting the image, I obtain the following:

The next step could be computing gradients. Note that in this rendering the red appears as bright green, and the girl behind me, who was wearing a light blue jacket, now appears orange. This creates a problem, since the simple gradient algorithm I was going to use for this experiment does not distinguish between colors. I therefore separated out the a* channel, since both magenta and red have high values in that channel. This means the colors that are not commonly found in nature are inherently incorporated into the gradient computation (cool, and less computation):
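One way to turn the a* channel into a single grayscale plane for the gradient step is sketched below. The `red_only` option, which clamps negative (green) a* values to zero, and the assumed a* ranges used for scaling are illustrative assumptions, not part of the original method. `a_star_intensity` is a hypothetical name.

```python
def a_star_intensity(lab_image, red_only=True):
    """Map the a* channel of a Lab image to a 0-255 grayscale plane.

    lab_image: 2D list of (L, a, b) tuples.
    red_only: hypothetical option; when True, negative a* (green) is clamped
    to 0 so that only red/magenta pixels stay bright.
    """
    out = []
    for row in lab_image:
        out_row = []
        for _, a, _ in row:  # keep only the a* component
            if red_only:
                value = max(a, 0.0) / 128.0 * 255.0  # assumes a* roughly in [0, 128]
            else:
                value = (a + 128.0) / 256.0 * 255.0  # assumes a* roughly in [-128, 128]
            # Clamp to the valid 8-bit range and round to an integer.
            out_row.append(int(round(min(max(value, 0.0), 255.0))))
        out.append(out_row)
    return out
```

With `red_only=True`, a red pixel with a* ≈ 80 maps to a bright value while green and neutral pixels map to 0. A gradient pass over this plane therefore responds mostly to red and magenta regions.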

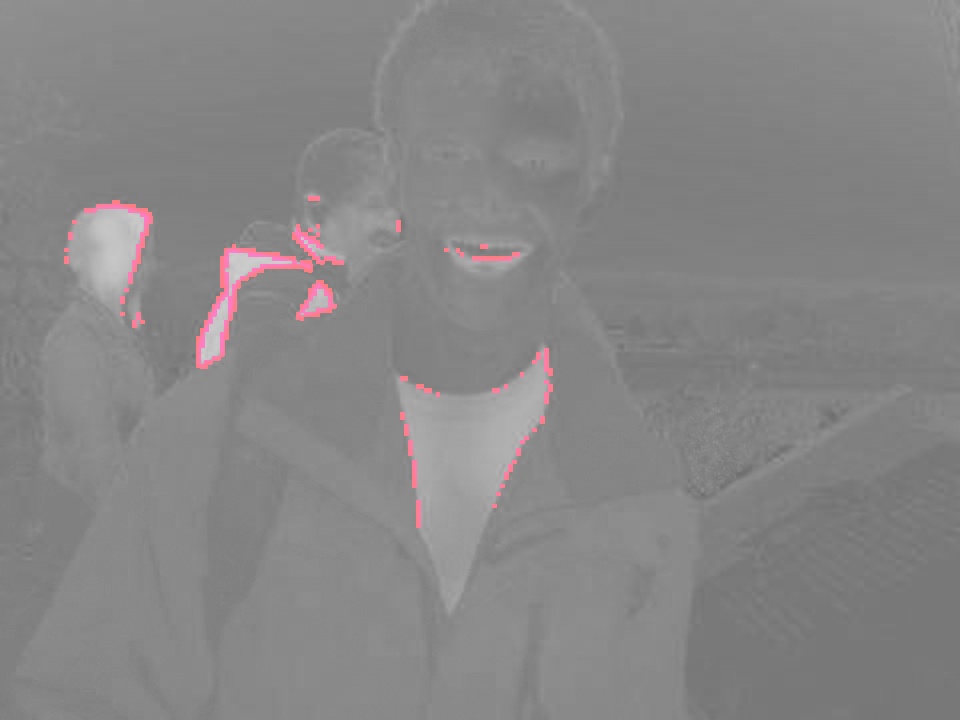

Now that I have filtered out the other colors, I simply run the gradient algorithm:

Bam! Easy detection. So far I have not tested or worked on checking for straight lines, since this was just a little side project and I have not had time. However, it seems relatively trivial at this point, and even if one decides not to use corners, any parallel lines found using this method would almost certainly be man-made (food for thought).
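The straight-line and parallel-line checks left as future work could look something like the sketch below. It assumes edge pixels have already been grouped into candidate segments of (x, y) points; that grouping is not shown. Each segment is fit with a principal-axis (total least squares) line and treated as straight if every point lies within a tolerance. `fit_line`, `is_straight`, `are_parallel`, and the default tolerances are all hypothetical.

```python
import math


def fit_line(points):
    """Fit a line through (x, y) points by principal axis (total least squares).

    Returns (angle, max_deviation): the line direction in radians, in
    [-pi/2, pi/2], and the largest perpendicular distance of any point from it.
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    # Direction of the major axis of the point scatter.
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)
    nx, ny = -math.sin(angle), math.cos(angle)  # unit normal to the line
    max_dev = max(abs((x - cx) * nx + (y - cy) * ny) for x, y in points)
    return angle, max_dev


def is_straight(points, tolerance=1.0):
    """True if every point lies within `tolerance` pixels of the fitted line."""
    return len(points) >= 2 and fit_line(points)[1] <= tolerance


def are_parallel(points_a, points_b, max_angle_deg=3.0):
    """True if two edge segments point in roughly the same direction."""
    angle_a, _ = fit_line(points_a)
    angle_b, _ = fit_line(points_b)
    diff = abs(angle_a - angle_b) % math.pi
    diff = min(diff, math.pi - diff)  # orientations are equivalent modulo 180 degrees
    return math.degrees(diff) <= max_angle_deg
```

The angle comparison wraps around 180 degrees because near-vertical segments can come out as roughly +90 or -90 degrees even though they have the same orientation.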

For completeness, I also have the b* stream:

As well as the b* gradient detection:

There are many more gradients detected in the b* image, which is what we predicted (since blue and yellow appear often in nature). If this stream were used for man-made object detection, there would be a large number of false positives. This is exactly why the a* stream is well suited to man-made object detection: it pre-filters out objects whose colors we are likely not interested in.

At this point I do not have time to complete this project, but I may attempt to finish it by early June. It should be relatively trivial, and I could likely detect man-made objects in real time, since the unoptimized program already runs at ~25 frames per second, including gradient detection, on my Pentium 4 computer.

Code:

I apologize for some of the “code smells”; I wrote this quickly to test an idea and will clean it up if I create a full final version of the man-made object detector.

RGB to Lab conversion and stream splitting


I was talking to Cole about Google Glass’s text recognition algorithm, and apparently it focuses on detecting straight lines, given their rarity in nature.

If you ignore the sky and water, blue is also rare in nature, which might explain why it’s one of the later color words to appear in language.

Irrelevant to computer vision, but it would be interesting to analyze words across all languages. You could generate a graph of when cultures discovered which technologies and who communicated with whom, tracing words back to their roots. It’s probably been done by a Googler or something, but I haven’t seen it yet.